AstonJ

Mac users - any unaccounted 'used' disk space on your drives?

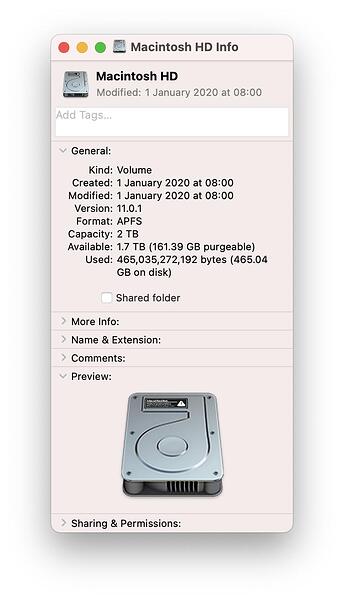

I’m not sure whether this is a Big Sur issue or I’ve only just noticed it, but my hard drive usage says I have used 465.03 GB.

On that disk there is:

- Applications (41.18 GB)

- Library (2.89 GB)

- System (24.89 GB)

- Users (225.59 GB)

= 294.55 GB

…which leaves 170.48 GB unaccounted for.

I’ve looked at the hidden files at the root level too, but they’re only 5 GB or so, which still leaves about 165 GB of disk space that seems to have vanished into thin air.
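The arithmetic in this post is easy to double-check. Here is a quick Python sketch using the Get Info figures above. The ~5 GB for hidden root-level files is the rough estimate from the post, and the variable names are only illustrative:

```python
# Sizes in GB as shown by Finder's Get Info (CMD + I) for each top-level folder.
folders = {
    "Applications": 41.18,
    "Library": 2.89,
    "System": 24.89,
    "Users": 225.59,
}
used_total = 465.03      # "used" figure reported for the whole drive
hidden_root_files = 5.0  # rough size of the hidden files at the root level

accounted = round(sum(folders.values()), 2)
unaccounted = round(used_total - accounted, 2)

print(f"accounted:   {accounted} GB")    # 294.55 GB
print(f"unaccounted: {unaccounted} GB")  # 170.48 GB
print(f"minus hidden files: ~{unaccounted - hidden_root_files:.0f} GB")  # ~165 GB
```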

What’s the situation on your drive?

(You can select a folder or drive and press ‘CMD + I’ to show its info; you can also select several folders and do the same.)

Marked As Solved

AstonJ

They’ve just got back to me and asked me to run:

```
tmutil deletelocalsnapshots /System/Volumes/Data
```

That dropped my used space to 345.76 GB.

And separately:

- Applications (46.83 GB)

- Library (1.21 GB)

- System (28.16 GB)

- Users (268.82 GB)
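Running the same arithmetic on the before and after figures shows how much the gap closed. This is a small Python sketch using only the numbers from this thread, assuming all four folder sizes are in GB:

```python
# Figures in GB, before and after deleting the local snapshots.
before_used = 465.03
after_used = 345.76
after_folders = {"Applications": 46.83, "Library": 1.21, "System": 28.16, "Users": 268.82}

freed = round(before_used - after_used, 2)
accounted = round(sum(after_folders.values()), 2)
gap = round(after_used - accounted, 2)

print(f"drop in reported used space: {freed} GB")      # 119.27 GB
print(f"accounted for by folders:    {accounted} GB")  # 345.02 GB
print(f"still unaccounted:           {gap} GB")        # 0.74 GB
```

After the snapshots are gone, less than 1 GB of used space is left unexplained by the four folders.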

Looks like you were right about the snapshots @NobbZ

Marking this as the solution in case it helps anyone else in future…
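If you would rather see what is there before deleting everything with the command above, `tmutil listlocalsnapshots <mount point>` lists the local snapshots. Below is a minimal Python sketch that runs it and extracts the snapshot dates. It assumes the snapshot names look like `com.apple.TimeMachine.<date>.local`. The exact output, including any header line, varies between macOS versions, so treat the parsing as an assumption:

```python
import shutil
import subprocess

PREFIX = "com.apple.TimeMachine."  # assumed snapshot name prefix
SUFFIX = ".local"                  # assumed snapshot name suffix


def snapshot_dates(listing: str) -> list:
    """Extract '2020-12-06-123456' from lines like 'com.apple.TimeMachine.2020-12-06-123456.local'."""
    dates = []
    for line in listing.splitlines():
        line = line.strip()
        # Header lines (printed by some macOS versions) don't match and are skipped.
        if line.startswith(PREFIX) and line.endswith(SUFFIX):
            dates.append(line[len(PREFIX):-len(SUFFIX)])
    return dates


if shutil.which("tmutil"):
    # Read-only: this only lists snapshots, it does not delete anything.
    result = subprocess.run(["tmutil", "listlocalsnapshots", "/"],
                            capture_output=True, text=True, check=True)
    for date in snapshot_dates(result.stdout):
        print(date)
else:
    print("tmutil not found (this only works on macOS)")
```

According to `man tmutil`, `tmutil deletelocalsnapshots <date>` should then remove a single snapshot rather than all of them. Check the man page on your macOS version first.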


ohm

What does `diskutil list` give you?

NobbZ

I’m not a Mac user, though it says something about ~160 GiB being purgeable.

How does it usually account for backups?

Also, it seems that APFS supports snapshots; how are those accounted for?

As a ZFS user who relies heavily on automated snapshots, I usually don't see the snapshots in a file manager, only when using the specialised ZFS tools.

Let's say `du -sh ~` tells me that `~` is 230 GB and `df -h ~` only reports 209G due to compression, while `zfs list` tells me this:

```
$ zfs list -o name,used,available,referenced,compressratio rpool/safe/home/nmelzer
NAME                     USED  AVAIL  REFER  RATIO
rpool/safe/home/nmelzer  302G  72.5G   209G  1.18x
```

As you can see, this subvolume directly refers to 209G, while its overall size is 302G. The additional ~80G comes from differences in the snapshots I keep for that volume.

Perhaps something similar is going on for your APFS?
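Here are the numbers from the `zfs list` output above. For a dataset that is not a clone, REFER roughly equals the space held by the live data. USED minus REFER therefore approximates what snapshots, plus any child datasets or reservations, are holding. `zfs list -o space` reports the snapshot share on its own as USEDSNAP. Below is a small Python sketch of the arithmetic. The parsing helper and its suffix table are assumptions for illustration:

```python
# Multipliers for the size suffixes `zfs list` prints (binary units); assumed subset.
UNITS = {"K": 1024, "M": 1024**2, "G": 1024**3, "T": 1024**4}


def to_bytes(size: str) -> float:
    """Convert a human-readable size such as '72.5G' into bytes."""
    suffix = size[-1].upper()
    if suffix in UNITS:
        return float(size[:-1]) * UNITS[suffix]
    return float(size)  # plain byte count, no suffix


def beyond_live_data(used: str, refer: str) -> float:
    """USED - REFER in GiB: roughly what snapshots, child datasets and reservations hold."""
    return (to_bytes(used) - to_bytes(refer)) / UNITS["G"]


# Data row copied from the `zfs list` output above.
row = "rpool/safe/home/nmelzer 302G 72.5G 209G 1.18x"
name, used, avail, refer, ratio = row.split()
print(f"{name}: {beyond_live_data(used, refer):.0f}G beyond the live data")
```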

NobbZ

Windows “snapshots” are something else entirely: they are just files copied from one location to another. Filesystem-level snapshots work differently. They mark blocks on your hard disk as immutable. Any later change is written to a copy of the block, and the filesystem pretends the immutable original has been overwritten while still keeping a reference to it.

You can then either drop those old references or roll back to them.
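Here is a toy model of that copy-on-write idea, written in Python purely for illustration. Real filesystems such as ZFS, BTRFS or APFS manage block references on disk in far more sophisticated ways, and the `CowStore` class and its methods are hypothetical. The model shows three things. Taking a snapshot is cheap because only references are copied. Overwritten data keeps using space while a snapshot still references it, which is why deleting local snapshots freed so much space earlier in this thread. Rolling back or dropping the snapshot releases that space:

```python
class CowStore:
    """Toy copy-on-write store: files are lists of block ids; blocks are never edited in place."""

    def __init__(self) -> None:
        self.blocks = {}     # block id -> bytes, immutable once written
        self.files = {}      # file name -> list of block ids (the live view)
        self.snapshots = {}  # snapshot name -> frozen copy of the file table
        self._next_id = 0

    def write(self, name: str, index: int, data: bytes) -> None:
        """Write a new block and repoint the file at it; the old block is left untouched."""
        table = self.files.setdefault(name, [])
        if index > len(table):
            raise IndexError("can only overwrite a block or append one at the end")
        block_id = self._next_id
        self._next_id += 1
        self.blocks[block_id] = data
        if index == len(table):
            table.append(block_id)
        else:
            table[index] = block_id
        self._collect()

    def snapshot(self, snap: str) -> None:
        """Cheap: copies the lists of block references, never the data itself."""
        self.snapshots[snap] = {n: list(ids) for n, ids in self.files.items()}

    def rollback(self, snap: str) -> None:
        """Make the snapshot's view live again."""
        self.files = {n: list(ids) for n, ids in self.snapshots[snap].items()}
        self._collect()

    def drop(self, snap: str) -> None:
        """Forget a snapshot; blocks that only it referenced become free."""
        del self.snapshots[snap]
        self._collect()

    def read(self, name: str) -> bytes:
        return b"".join(self.blocks[i] for i in self.files[name])

    def _collect(self) -> None:
        """Free every block referenced neither by the live view nor by any snapshot."""
        tables = [self.files, *self.snapshots.values()]
        referenced = {i for t in tables for ids in t.values() for i in ids}
        for block_id in list(self.blocks):
            if block_id not in referenced:
                del self.blocks[block_id]


store = CowStore()
store.write("db.table", 0, b"v1")
store.snapshot("before")
store.write("db.table", 0, b"v2")
print(store.read("db.table"), len(store.blocks))  # b'v2' 2  (the snapshot pins the old block)
store.rollback("before")
print(store.read("db.table"), len(store.blocks))  # b'v1' 1  (the v2 block was freed)
```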

On BTRFS or ZFS I can create a snapshot of 500 GiB of data in a split second and then copy that snapshot over to my backup system. The nice thing is that the system can stay active during that time.

Let's consider a PostgreSQL or MySQL database. If you just `cp` the data folder and use the copy as a backup, the copied database will probably be corrupted, because the files were copied one after another. For example, the index file was copied after the table itself, and the index had changed in the meantime.

A BTRFS or ZFS snapshot, though, is atomic: all files are frozen at the same point in time. If you take a snapshot and copy it as a backup, you can start the database from that snapshot. It will recover much as it would after a power outage, since all files are still coherent with each other.
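The difference can be simulated in a few lines of Python. The in-memory "data directory" with a `table` and an `index`, and the helper names, are hypothetical stand-ins for real database files. `copy.deepcopy` of the whole dictionary stands in for an atomic filesystem snapshot:

```python
import copy

# Hypothetical "data directory": a table and an index that must agree with each other.
data_dir = {"table": ["alice", "bob"], "index": {"alice": 0, "bob": 1}}


def insert(d: dict, name: str) -> None:
    """Append a row and update the index, as a running database would."""
    d["table"].append(name)
    d["index"][name] = len(d["table"]) - 1


def consistent(d: dict) -> bool:
    """Every row is indexed and every index entry points at the matching row."""
    rows = d["table"]
    return len(d["index"]) == len(rows) and all(
        pos < len(rows) and rows[pos] == name for name, pos in d["index"].items()
    )


# File-by-file copy: the table is copied, the database keeps running,
# and only then is the index copied, so the two come from different moments.
serial_backup = {"table": copy.deepcopy(data_dir["table"])}
insert(data_dir, "carol")
serial_backup["index"] = copy.deepcopy(data_dir["index"])

# Snapshot: every file is captured at the same instant.
snapshot = copy.deepcopy(data_dir)
insert(data_dir, "dave")

print("serial copy consistent:", consistent(serial_backup))  # False
print("snapshot consistent:   ", consistent(snapshot))       # True
```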
